A recent post over at Cloudinary shows off some of the neat things you can do with HTML’s new element and Cloudinary’s cloud-based image management service. In a nutshell, it describes methods for applying different crops to the same image based on the aspect ratio of an image container given any state of a responsive layout. So as the image container gets wider, for instance, the picture gets cropped into a shallower aspect ratio so as to avoid pushing the content below it further down.

A recent post over at Cloudinary shows off some of the neat things you can do with HTML’s new element and Cloudinary’s cloud-based image management service. In a nutshell, it describes methods for applying different crops to the same image based on the aspect ratio of an image container given any state of a responsive layout. So as the image container gets wider, for instance, the picture gets cropped into a shallower aspect ratio so as to avoid pushing the content below it further down.

Where it gets interesting is when Cloudinary’s parameter comes into play. That allows the cropping to be done in an intelligent—or less dumb—way. The developer can specify that the image gets cropped from the top before the bottom, from the left before the right, etc. That’s a powerful tool for designers; being able to define how an image is viewed in different scenarios allows a responsive layout to better maintain fidelity to the designer’s original intent. Essentially, it brings back some measure of the control that visual designers relinquished in the digital realm.

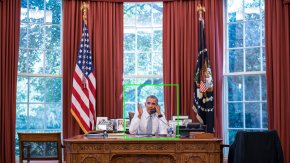

Even more interesting, the gravity parameter takes a face detection variable which builds even more intelligence into the cropping. Cloudinary can detect the presence and location of a single face or even multiple faces and make sure that they are preserved in any given crop (within the limits of practicality). No more heads lopped off unceremoniously.

This stuff is still fairly rudimentary as far as making computer vision practical and commercially viable, but I find it very exciting. There are many interesting roads ahead for user experience design, but the notion of content-aware layouts particularly holds a lot of promise. The templates that designers create for web sites and apps today are more or less ignorant of the content that they’re intended to deliver to users. To be sure, designers build in as many smarts as they can—if we know that a given image container is usually going to contain portrait-oriented images, we shape the layout accordingly.

But in the future we’ll be able to define truly responsive systems that react not just to the size of the viewport—the box, so to speak—but also the content—what’s in the box. Given time, rich image services like Cloudinary and Imgix (which also supports face detection) will be able to extract color information directly from an image and offer up complementary palettes, identify the best spots to place type on an image, automatically wrap text around an image silhouette, automatically adjust the color and shadow of type for maximum legibility given an image, and so many more possibilities. That will make the designer’s job a bit harder in that there will be many more scenarios to account for when designing a solution. But that’s been the trend for designing in digital media anyway: every five years or so, the problem gets an order of magnitude more complex At the same time though the solutions become orders of magnitude more meaningful for users, too.

YOU MIGHT ALSO LIKE